Selected articles to help beginners quickly understand spike cameras and the SpikeCV framework.

Author: Tiejun Huang

Published in: Journal of Electronics (电子学报)

Introduces the principle of spiking continuous imaging and demonstrates its advantages in ultrahigh-speed and high-dynamic-range scenarios.
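Spike cameras are usually described with an integrate-and-fire model. Each pixel accumulates incoming light, fires a spike when the accumulator reaches a threshold, and then resets. The sketch below simulates that textbook model, assuming constant per-pixel intensity and reset-by-subtraction. The function name `integrate_and_fire` and its parameters are hypothetical, and the code does not model real sensor circuitry or noise.

```python
import numpy as np

def integrate_and_fire(intensity, n_steps, threshold=1.0):
    """Simplified integrate-and-fire spike generation (illustrative, not sensor-accurate).

    intensity: array of shape (H, W), light accumulated per readout step (assumed constant).
    Returns a uint8 spike array of shape (n_steps, H, W).
    """
    intensity = np.asarray(intensity, dtype=np.float64)
    acc = np.zeros_like(intensity)                      # per-pixel accumulator
    spikes = np.zeros((n_steps,) + intensity.shape, dtype=np.uint8)
    for t in range(n_steps):
        acc += intensity                                # integrate incoming light
        fired = acc >= threshold                        # pixels that reached the threshold
        spikes[t][fired] = 1                            # emit a spike at this readout step
        acc[fired] -= threshold                         # reset by subtracting the threshold
    return spikes

# Brighter pixels fire more often, so spike frequency encodes intensity.
s = integrate_and_fire([[0.5, 0.25]], n_steps=8)
print(s.sum(axis=0))
```

Because brighter pixels reach the threshold sooner, the spike train of every pixel carries intensity information continuously in time rather than only at fixed frame boundaries.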

Authors: Yajing Zheng, Jiyuan Zhang, Rui Zhao, Jianhao Ding, Shiyan Chen, Weijian Wu, Ruiqing Xiong, Zhaofei Yu, Tiejun Huang

Published in: SCIENCE CHINA Information Sciences

An overview of SpikeCV as an open-source framework for continuous vision, highlighting its ecosystem, algorithms, and applications.

Authors: Yajing Zheng, Lingxiao Zheng, Zhaofei Yu, Boxin Shi, Yonghong Tian, Tiejun Huang

Published in: Proceedings of CVPR 2021

Presents the principle of spike camera reconstruction and provides a comparison with another popular neuromorphic sensor, the event camera.
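One simple way to reconstruct intensity from spikes follows directly from the integrate-and-fire model: if a pixel fires every `k` steps with threshold θ, its intensity is roughly θ / k. The sketch below implements this interval-based idea (often called texture-from-interval in the spike camera literature). It is an illustrative estimator under that simplified model, not necessarily the method presented in the paper; the function name `interval_reconstruct` is hypothetical.

```python
import numpy as np

def interval_reconstruct(spikes, t, threshold=1.0):
    """Interval-based intensity estimate at time step t (illustrative sketch).

    spikes: binary array of shape (T, H, W).
    For each pixel, uses the gap between its two most recent spikes at or before t.
    Pixels with fewer than two spikes so far are set to 0.
    """
    _, height, width = spikes.shape
    out = np.zeros((height, width), dtype=np.float64)
    for i in range(height):
        for j in range(width):
            idx = np.flatnonzero(spikes[: t + 1, i, j])   # spike times up to t
            if idx.size >= 2:
                out[i, j] = threshold / (idx[-1] - idx[-2])
    return out

# Pixel 0 fires every 2 steps, pixel 1 every 4 steps.
spikes = np.zeros((8, 1, 2), dtype=np.uint8)
spikes[[1, 3, 5, 7], 0, 0] = 1
spikes[[3, 7], 0, 1] = 1
print(interval_reconstruct(spikes, t=7))
```

Under the idealized model this recovers the per-step intensities (0.5 and 0.25); real data is noisy, which is why window-averaging and learned reconstruction methods are also used.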

Authors: Jiyuan Zhang, Yajing Zheng, Zhaofei Yu, Tiejun Huang

Published in: Strategic Study of CAE (中国工程科学), 2024

Focuses on spike-based visual research for autonomous driving, exploring how spike cameras can enhance perception in dynamic and safety-critical scenarios.

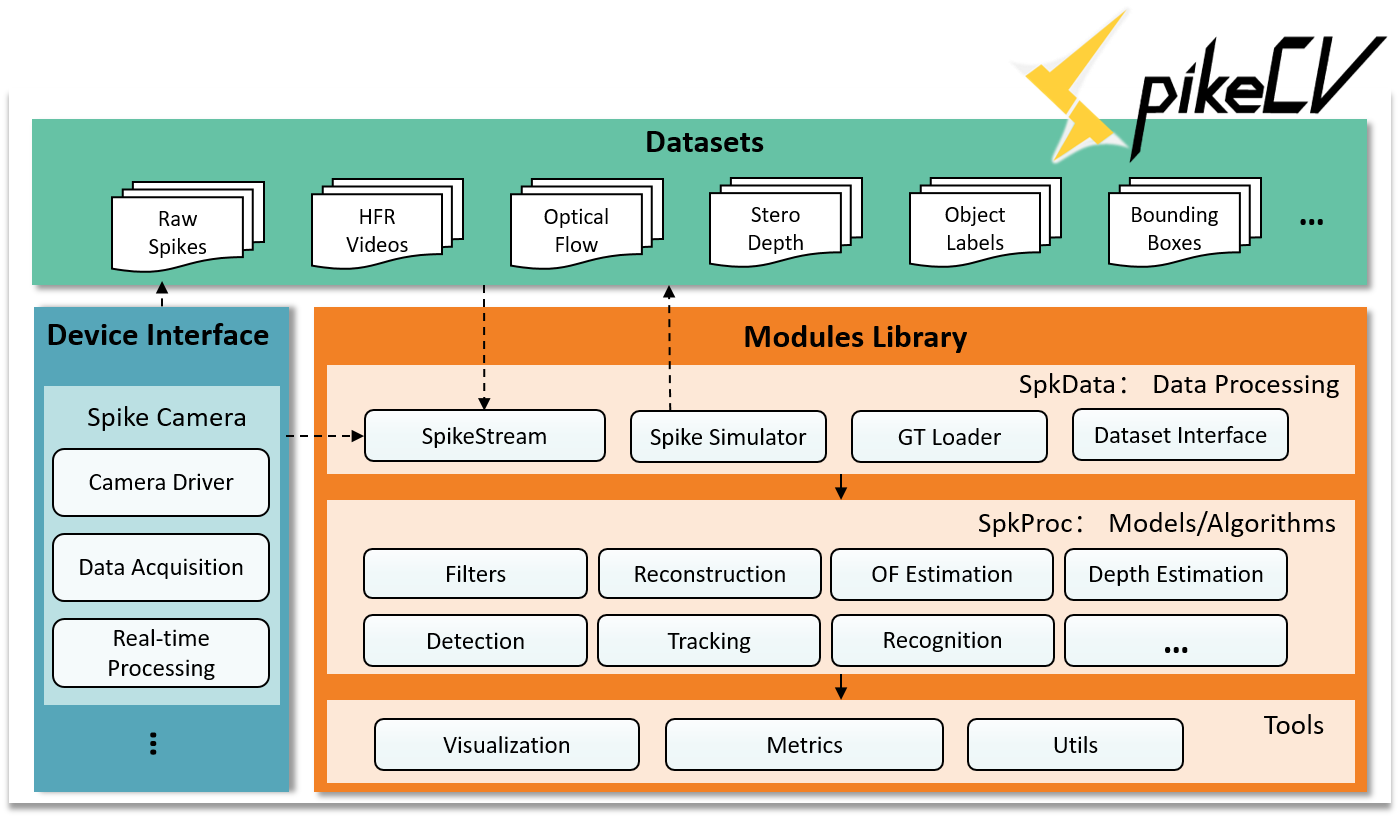

SpikeCV provides a SpikeStream class that encapsulates spike data, normalized dataset interfaces with standard parameter-passing modes, and a comprehensively modular design, making it easy for developers to customize and improve algorithms.
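To illustrate what "encapsulating spike data" behind a uniform interface can look like, here is a minimal, hypothetical container. `SpikeStreamSketch` is not SpikeCV's real SpikeStream class, and its methods are not SpikeCV's API. The bit layout in `from_packed_bytes` (one frame = height × width bits, 8 pixels per byte, least significant bit first) is an assumption made only for this example; consult the SpikeCV documentation for the actual raw format.

```python
from dataclasses import dataclass, field
import numpy as np

@dataclass
class SpikeStreamSketch:
    """Hypothetical stand-in for a spike-data container (not SpikeCV's API)."""
    height: int
    width: int
    spikes: np.ndarray                  # binary array of shape (T, height, width)
    meta: dict = field(default_factory=dict)

    def __post_init__(self):
        self.spikes = np.asarray(self.spikes, dtype=np.uint8)
        if self.spikes.ndim != 3 or self.spikes.shape[1:] != (self.height, self.width):
            raise ValueError("spikes must have shape (T, height, width)")

    @classmethod
    def from_packed_bytes(cls, raw: bytes, height: int, width: int, **meta):
        # ASSUMED layout: height*width bits per frame, packed LSB-first, frames back to back.
        bits = np.unpackbits(np.frombuffer(raw, dtype=np.uint8), bitorder="little")
        frame_bits = height * width
        n_frames = bits.size // frame_bits            # drop any trailing partial frame
        spikes = bits[: n_frames * frame_bits].reshape(n_frames, height, width)
        return cls(height, width, spikes, dict(meta))

    def __len__(self):
        return self.spikes.shape[0]                   # number of spike frames

    def window(self, start: int, length: int) -> np.ndarray:
        # Temporal slice that downstream algorithms can consume uniformly.
        return self.spikes[start : start + length]

# Dataset parameters passed as one dict, mimicking a standard parameter-passing style.
params = {"height": 2, "width": 4}
stream = SpikeStreamSketch.from_packed_bytes(bytes([0b00000101, 0xFF]), **params, camera="demo")
print(len(stream), stream.window(0, 1))
```

Keeping shape checks and parsing inside one class means every algorithm receives the same `(T, H, W)` layout, which is the kind of decoupling the modular design above is meant to provide.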

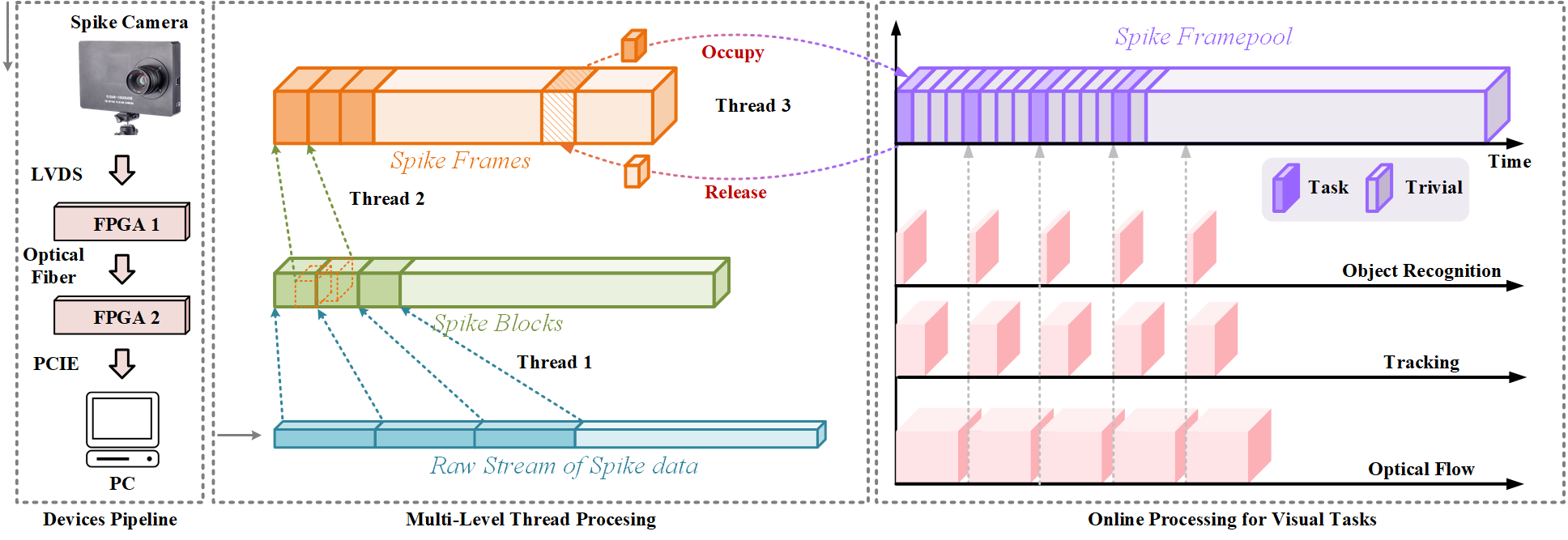

For real-time data reading, SpikeCV uses multi-level threads, built on multiple C++ thread pools, to read spike data at high temporal resolution. For real-time inference, the encapsulated SpikeStream instance can be supplied to multiple parallel threads running different models.
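The producer/consumer pattern described above can be sketched in Python with `threading` and `queue`: one reader thread slices incoming spike frames into chunks and broadcasts each chunk to one bounded queue per model, and each model runs in its own thread. This is only an analogy to SpikeCV's C++ thread pools; the function names and the chunking scheme are hypothetical.

```python
import queue
import threading
import numpy as np

SENTINEL = None  # marks end of stream for each worker

def reader(frames, chunk_len, out_queues):
    # Reading level: slice the spike stream into chunks and broadcast each chunk to every model.
    for start in range(0, len(frames), chunk_len):
        chunk = frames[start : start + chunk_len]
        for q in out_queues:
            q.put(chunk)            # blocks if a model falls behind (bounded queue)
    for q in out_queues:
        q.put(SENTINEL)

def model_worker(name, fn, in_q, results, lock):
    # Inference level: each model consumes chunks independently, in arrival order.
    while True:
        chunk = in_q.get()
        if chunk is SENTINEL:
            break
        out = fn(chunk)
        with lock:
            results.setdefault(name, []).append(out)

def run_pipeline(frames, chunk_len, models, max_pending=4):
    queues = {name: queue.Queue(maxsize=max_pending) for name in models}
    results, lock = {}, threading.Lock()
    workers = [
        threading.Thread(target=model_worker, args=(name, fn, queues[name], results, lock))
        for name, fn in models.items()
    ]
    for w in workers:
        w.start()
    reader_thread = threading.Thread(target=reader, args=(frames, chunk_len, list(queues.values())))
    reader_thread.start()
    reader_thread.join()
    for w in workers:
        w.join()
    return results

frames = np.random.randint(0, 2, size=(10, 4, 4), dtype=np.uint8)
res = run_pipeline(frames, 4, {"count": lambda c: int(c.sum()), "rate": lambda c: float(c.mean())})
print(res)
```

Bounded queues give simple backpressure: a slow model throttles the reader instead of letting memory grow without limit. Chunks are shared read-only views, so models must not modify them in place.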

SpikeCV not only provides spike processing tools and spike-based visual algorithms but also provides spike camera hardware interfaces and normalized spike datasets. Beginners can thoroughly learn what spike data is and how to use spike cameras to tackle visual tasks.

In SpikeCV, we aim to synchronize data acquisition and algorithm processing in a real-time scheme. The pipeline parses user-friendly spikes from the raw hardware output and processes visual tasks in real time. It consists of two parts: multi-level threads for reading real-time spikes, and online applications.
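The two-part data flow can be sketched with Python generators, leaving threading aside: part 1 parses raw per-frame packets into binary spike frames as they arrive, and part 2 is an online application that keeps a sliding window and emits a spike-rate image at every step (a window-averaging idea sometimes called texture-from-playback). The packet layout, function names, and choice of application are assumptions for illustration, not SpikeCV's implementation.

```python
from collections import deque
from typing import Iterable, Iterator
import numpy as np

def parse_frames(raw_frames: Iterable[bytes], height: int, width: int) -> Iterator[np.ndarray]:
    """Part 1 (sketch): raw packets -> binary spike frames.

    ASSUMES one packet per frame holding height*width bits, packed LSB-first.
    """
    for raw in raw_frames:
        bits = np.unpackbits(np.frombuffer(raw, dtype=np.uint8), bitorder="little")
        yield bits[: height * width].reshape(height, width)

def online_rate_image(frames: Iterable[np.ndarray], window: int) -> Iterator[np.ndarray]:
    """Part 2 (sketch): per-step spike-rate image over the last `window` frames."""
    buf = deque()
    acc = None
    for f in frames:
        f = f.astype(np.int32)
        acc = f.copy() if acc is None else acc + f   # add the newest frame
        buf.append(f)
        if len(buf) > window:
            acc -= buf.popleft()                     # drop the oldest frame
        yield acc / len(buf)                         # average firing rate per pixel

# Simulated hardware output: four 2x4 frames, one byte each.
packets = [bytes([0xFF]), bytes([0x00]), bytes([0xFF]), bytes([0xFF])]
for img in online_rate_image(parse_frames(packets, 2, 4), window=2):
    print(img.mean())
```

Because both stages are lazy, each frame is processed as soon as it is parsed, which mirrors the goal of keeping acquisition and processing synchronized; a real deployment would run the stages in separate threads.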